Run larger neural networks in edge devices with powerful, power-efficient intelligence.

Fast, flexible, made for more complex (and more compelling) tasks

Ergo 2 provides the performance to handle more complex use cases, including those requiring transformer models, larger neural networks, multiple networks operating simultaneously, and multimodal inputs.

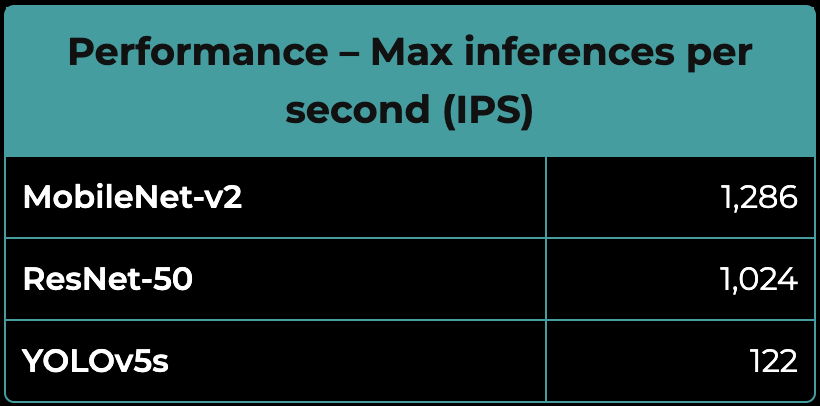

| Model | Performance: max inferences per second (IPS) |
|---|---|
| MobileNet-v2 | 1,286 |
| ResNet-50 | 1,024 |
| YOLOv5s | 122 |
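To put the throughput figures in the table above into per-frame terms, the short Python sketch below converts each IPS value into an average time per inference and a rough count of 30 fps video streams that would fit in that budget. It is illustrative arithmetic only: the function names are made up for this example, and it assumes one inference per frame with no pre-processing, post-processing, or I/O overhead. On a pipelined chip, average time per inference at maximum throughput is not the same as single-inference latency.

```python
import math

# Max inferences per second (IPS), copied from the table above.
MAX_IPS = {"MobileNet-v2": 1286, "ResNet-50": 1024, "YOLOv5s": 122}


def avg_ms_per_inference(ips: float) -> float:
    """Average time per inference at max throughput, in milliseconds."""
    return 1000.0 / ips


def max_streams(ips: float, fps: float = 30.0) -> int:
    """Whole number of video streams at `fps` that fit in the IPS budget.

    Assumption (not from the source): one inference per frame and
    no other overhead.
    """
    return math.floor(ips / fps)


if __name__ == "__main__":
    for name, ips in MAX_IPS.items():
        print(
            f"{name}: {avg_ms_per_inference(ips):.2f} ms per inference, "
            f"{max_streams(ips)} stream(s) at 30 fps"
        )
```

Under these assumptions, the YOLOv5s figure of 122 IPS corresponds to about four simultaneous 30 fps streams, while the MobileNet-v2 and ResNet-50 figures leave far more headroom.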

In addition to standard CNNs, RNNs, and LSTMs, Ergo 2 enables developers to leverage more powerful networks such as RoBERTa, GANs, and U-Nets, delivering responsive, accurate results, processed on-device for better power-efficiency, privacy, and security.

The Ergo 2 chip has been designed with a pipelined architecture and unified memory, which improve its flexibility and overall operating efficiency. As a result, it supports higher-resolution sensors and a wider range of applications, including:

- Language processing applications like speech-to-text and sentence completion

- Audio applications like acoustic echo cancellation and richer audio event detection

- Demanding video processing tasks like video super resolution and pose detection

Ergo 2 works with large networks, high frame rates, and multiple networks, enabling intelligent video and audio features for devices such as:

- Enterprise-grade cameras for security, access control, thermal imaging, or retail video analytics applications

- Industrial use cases including visual inspection

- Consumer products including laptops, tablets, and advanced wearables

Like its predecessor, Ergo 2 has a 7 mm by 7 mm footprint and requires no external DRAM, making it well suited for use in compact devices.

Powerful, yet power-efficient

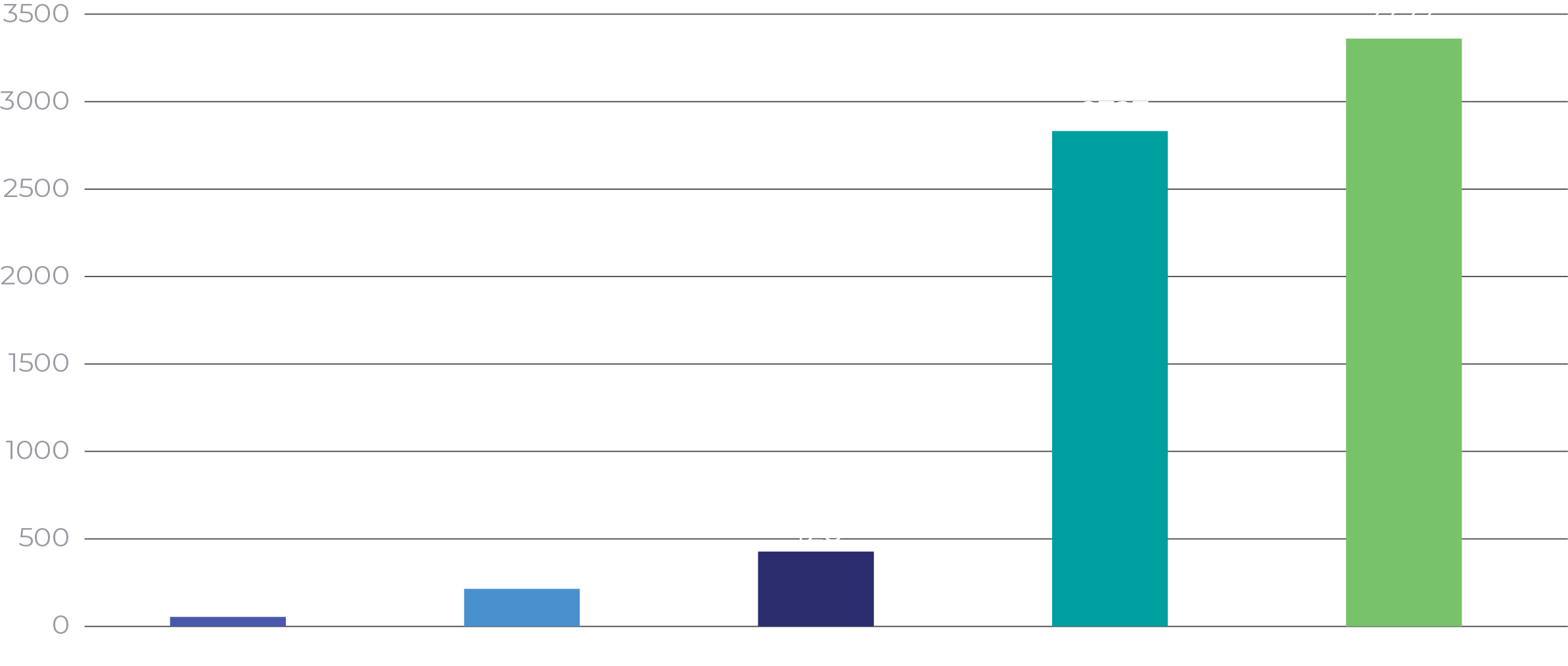

Ergo 2 offers significantly better power-efficiency than alternatives, running inferences on 30 fps video feeds in as little as 17 mW of compute power. This means it can operate within a variety of power-constrained devices and situations without external cooling, making it ideal for small devices and more versatile product packaging.
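The 17 mW at 30 fps figure can be turned into an energy-per-frame number with simple arithmetic, sketched below in Python. The function names and the 1 Wh energy budget are hypothetical values chosen for illustration. The figure covers compute power only and is a best case ("as little as"), so a real device would draw more once sensors, memory traffic, and the rest of the system are included.

```python
def energy_per_frame_mj(power_mw: float, fps: float) -> float:
    """Energy per frame in millijoules: mW / (frames per second) = mJ per frame."""
    return power_mw / fps


def runtime_hours(budget_wh: float, power_mw: float) -> float:
    """Hours of continuous operation for an energy budget in watt-hours.

    Counts only the given power draw; everything else in the device
    is ignored (an assumption made for this sketch).
    """
    return budget_wh * 1000.0 / power_mw


if __name__ == "__main__":
    # Figures from the text: 17 mW of compute power on a 30 fps feed.
    print(f"{energy_per_frame_mj(17, 30):.3f} mJ per frame")
    # Hypothetical 1 Wh energy budget, compute power only.
    print(f"{runtime_hours(1.0, 17):.1f} hours on 1 Wh")
```

At the quoted best case this works out to roughly 0.57 mJ of compute energy per frame.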

ResNet‑50 Power Efficiency (IPS/W)

Processing power to handle serious on-device ML features

Reducing or eliminating the need to send data to the cloud for inference lowers latency, improves power-efficiency, saves cloud costs, and minimizes the opportunity for sensitive data to be intercepted.

Ergo 2 provides product developers with locally processed machine learning without trading away features or performance due to a lack of processing power or the power constraints of the device.

Development tools and model zoo to get you started quickly

Ergo 2 is supported by a full suite of development tools to ensure successful integration into your device. Perceive provides an ML toolchain that compresses and optimizes neural networks to run on Ergo 2, a software development kit for building embedded applications using Ergo 2, and a model zoo with example networks and applications to accelerate the development process.

NVIDIA Jetson Xavier NX: https://developer.nvidia.com/embedded/jetson-benchmarks (Feb 27, 2022)

Qualcomm Snapdragon 888: https://www.qualcomm.com/content/dam/qcomm-martech/dm-assets/documents/tech_summit_2020-ai_-_jeff.pdf

Hailo Hailo-8: https://hailo.ai/developer-zone/benchmarks/